In 2023, an app inferred that a woman might be pregnant, weeks before she had told anyone. Her search history, calendar entries, and health data had silently “spoken.” That information was sold, anonymized, and resold until a targeted advertisement finally gave her secret away to a coworker. This isn’t a dystopian novel; it’s a sign of how AI systems, fed with our digital footprints, can draw inferences that spill into the real world. At a time when artificial intelligence promises personalized experiences, rapid diagnostics, and data-driven insight, a haunting question emerges: can we truly trust AI with our most intimate data?

I. The Nature of Trust in the Age of Algorithms 🤖🔒

Trust is no longer reserved for humans or institutions—it is increasingly being placed in code. AI systems are often "black boxes" whose inner workings are not fully understood even by their creators. Yet these systems are entrusted with health records, financial behaviors, biometrics, legal decisions, and surveillance data. But trust, in ethical and legal contexts, must be earned, not presumed.

What does it mean to trust an AI system with data?

It involves believing that:

- The system won’t misuse the data.
- The data won’t be accessed by unauthorized parties.
- The AI won’t reinforce bias or discriminate.
- There’s a recourse mechanism when things go wrong.

And herein lies the crux: in many modern AI applications, none of these assurances are guaranteed.

II. Ethical Dilemmas: When Convenience Meets Compromise ⚖️💡

Let’s examine three core ethical dilemmas at the intersection of AI and data privacy.

| Dilemma | Description | Real-Life Example |
|---|---|---|
| Consent vs. Comprehension | Users often “agree” to data collection without understanding how their data will be used. | Facebook-Cambridge Analytica scandal: users who installed a personality-quiz app unknowingly gave it access to their friends' data. |
| Accuracy vs. Accountability | AI systems can make errors, yet it is often unclear who should be held accountable. | Apple Card (issued by Goldman Sachs), 2019: its credit algorithm allegedly offered women lower limits than men, even when the women had better credit profiles. |
| Anonymity vs. Re-identification | Even anonymized data can often be re-identified by linking it with other data sources. | Netflix Prize dataset: researchers re-identified some users by matching their “anonymous” ratings against public IMDb reviews. |

These cases are not outliers—they are symptoms of deeper systemic issues.
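The re-identification row is the most mechanical of the three, so it helps to see how little it takes. Below is a deliberately simplified linkage attack in Python: it links an “anonymized” record to a named public profile by counting shared (movie, rating) pairs, and only claims a match when one candidate clearly beats the rest. This is not the Netflix Prize researchers' actual method, which was more robust to noisy ratings and dates; all IDs, names, and ratings here are hypothetical.

```python
from collections import Counter

# Hypothetical "anonymized" release: names replaced by opaque IDs.
anonymized = {
    "u1": {("Movie A", 5), ("Movie B", 2), ("Movie C", 4)},
    "u2": {("Movie A", 3), ("Movie D", 5)},
    "u3": {("Movie B", 2), ("Movie C", 4), ("Movie E", 1)},
}

# Hypothetical public reviews posted under real names elsewhere.
public = {
    "alice": {("Movie A", 5), ("Movie C", 4)},
    "bob": {("Movie D", 5)},
}

def best_match(public_ratings, released):
    """Return the anonymized ID sharing the most (movie, rating) pairs,
    or None when there is no overlap or the top score is tied."""
    scores = Counter({uid: len(public_ratings & ratings) for uid, ratings in released.items()})
    ranked = scores.most_common(2)
    if not ranked:
        return None
    top_id, top_score = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else 0
    # Only claim a match when it is unambiguous
    if top_score == 0 or top_score == runner_up:
        return None
    return top_id

for name, ratings in public.items():
    print(name, "->", best_match(ratings, anonymized))
```

Two overlapping ratings are enough to single out “u1” as alice here; with real datasets, a handful of less popular titles can be just as identifying.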

III. Legal Labyrinths: Regulation in a Fast-Moving Landscape 📜⚖️

Laws are trying to catch up with innovation, but the pace of AI development far exceeds the speed of legal reform. Below is a comparison of three key legal frameworks attempting to regulate data and AI:

| Regulation | Region | Key Strengths | Gaps |
|---|---|---|---|
| GDPR (General Data Protection Regulation) | European Union | Strong on consent, data-subject rights, and penalties for violations | Limited and contested “right to explanation” for automated AI decisions |
| CCPA (California Consumer Privacy Act) | California, USA | Transparency and the right to opt out of the sale of personal data | State law only, so U.S. protection remains a patchwork; private lawsuits are largely limited to data breaches |
| China's PIPL (Personal Information Protection Law) | China | Strict data-localization and cross-border transfer rules | Broad exceptions for government access reduce trust |

AI systems routinely operate across borders, but laws do not. The result is jurisdictional mismatches that let some companies forum-shop for the least restrictive environment.

IV. Case Study: Predictive Policing – Algorithmic Bias in Action 🚨⚖️

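In several U.S. cities, predictive policing algorithms analyze crime data to determine where officers should be deployed. These systems appear neutral, but they are built on historical crime data that often reflects decades of over-policing in marginalized communities. Heavier patrols in a neighborhood produce more recorded crime there, which the algorithm then reads as a reason to send still more patrols.

Impact: The same pattern appears in courtroom risk assessment. In 2016, a ProPublica investigation of COMPAS, a recidivism-prediction tool used by U.S. courts to inform decisions such as bail and sentencing, found that Black defendants who did not go on to reoffend were almost twice as likely as white defendants who did not reoffend to have been flagged as high risk.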
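The disparity ProPublica reported is a gap in false positive rates: among people who did not reoffend, how often each group was nonetheless flagged as high risk. A minimal Python sketch of that calculation follows. The `Record` fields and the records themselves are hypothetical toy data, chosen so the ratio comes out to 2; they are not ProPublica's dataset.

```python
from typing import NamedTuple

class Record(NamedTuple):
    group: str               # demographic group label (hypothetical)
    reoffended: bool         # observed outcome
    flagged_high_risk: bool  # the model's prediction

def false_positive_rate(records, group):
    """Share of people in `group` who did NOT reoffend but were flagged high risk."""
    negatives = [r for r in records if r.group == group and not r.reoffended]
    if not negatives:
        return float("nan")  # rate is undefined without any non-reoffenders
    return sum(r.flagged_high_risk for r in negatives) / len(negatives)

# Toy data: 10 non-reoffenders per group; group A has 4 false flags, group B has 2.
records = (
    [Record("A", False, i < 4) for i in range(10)]
    + [Record("B", False, i < 2) for i in range(10)]
    + [Record("A", True, True) for _ in range(5)]
    + [Record("B", True, True) for _ in range(5)]
)

fpr_a = false_positive_rate(records, "A")
fpr_b = false_positive_rate(records, "B")
print(f"FPR A={fpr_a:.2f}, FPR B={fpr_b:.2f}, ratio={fpr_a / fpr_b:.1f}")
```

The metric isolates who bears the cost of the model's mistakes, which overall accuracy figures can hide.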

This raises hard questions:

- Who is responsible for an AI’s decisions?
- Can we use biased historical data without reproducing discrimination?
- Should humans always be in the loop?

These are not technical problems alone—they’re ethical ones.

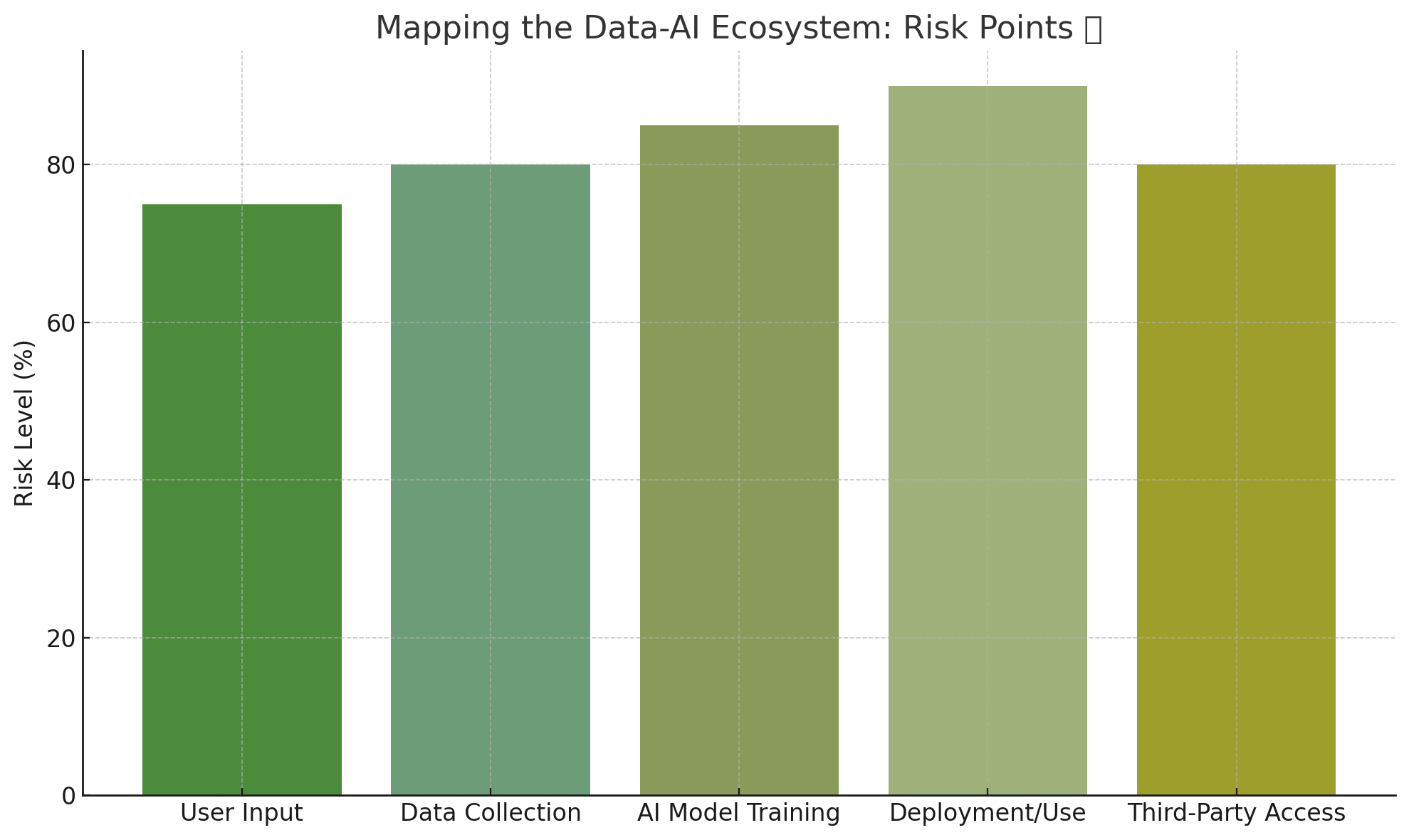

V. Architecture of Risk: Mapping the Data-AI Ecosystem 🌐🔍

To trust AI with our data, we need to understand where the vulnerabilities lie:

[User Input] --> [Data Collection] --> [AI Model Training] --> [Deployment/Use] --> [Third-Party Access]

Every arrow represents a point where data can be mishandled, misunderstood, or misused. Ethical AI isn’t just about coding responsibly—it’s about designing accountability into every layer.
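One way to make each arrow in that pipeline auditable is a tamper-evident log: every handoff between stages appends an entry whose hash covers the previous entry, so quietly editing history breaks the chain. The Python sketch below assumes that design. The stage names mirror the diagram; `AuditLog`, the actors, and the purposes are hypothetical.

```python
import hashlib
import json

# Stage names mirror the pipeline diagram above.
STAGES = ("user_input", "data_collection", "model_training", "deployment", "third_party_access")
GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

class AuditLog:
    """Hypothetical append-only log of who touched personal data, at which stage, and why."""

    def __init__(self):
        self.entries = []

    def record(self, stage: str, actor: str, purpose: str) -> None:
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"stage": stage, "actor": actor, "purpose": purpose, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash. Edits, reordering, or deletions in the middle
        break the chain; silently truncating the tail does not."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("stage", "actor", "purpose", "prev")}
            if entry["prev"] != prev or entry["hash"] != _digest(body):
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
log.record("data_collection", "signup-form", "account creation")
log.record("model_training", "ml-team", "recommendation model")
log.record("third_party_access", "ad-partner", "targeted advertising")
print(log.verify())  # True

log.entries[1]["purpose"] = "fraud detection"  # quietly rewrite history
print(log.verify())  # False
```

A hash chain makes tampering detectable, not impossible: whoever controls the whole log could rewrite every entry or drop the newest ones. In practice, the latest hash would need to be published or held by an independent party.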

VI. Is "Ethical AI" an Oxymoron? 🤔🧑‍💼

While many tech giants proclaim their commitment to “ethical AI,” critics argue that these frameworks are often voluntary and lack enforcement. One recent study reportedly found that 80% of AI ethics boards had no power to influence product decisions. Ethical codes too often serve as PR shields rather than operational guardrails.

And yet, abandoning AI is not an option. Its benefits in medicine, education, and accessibility are transformative. The real challenge is learning to govern and constrain AI without stifling innovation.

VII. Navigating the Grey Zone: Paths Forward 🛤️🌟

We must resist binary thinking. The question isn’t simply “Can we trust AI with our data?” but rather:

- Under what conditions can trust be justified?
- What guardrails are necessary, and who builds them?

Here’s a proposed framework:

| Principle | Implementation |
|---|---|
| Transparency | Mandate explainable AI, open logs, and decision audits |
| Data Sovereignty | Give users full control over their personal data, including deletion |
| Independent Oversight | Create regulatory bodies with technical and ethical expertise |
| Inclusive Design | Ensure diverse teams train and evaluate AI systems |
| Legal Recourse | Enshrine the right to challenge algorithmic decisions in court |

Final Thoughts: Trust Is Built, Not Assumed 🔑🤝

AI systems do not lie, cheat, or steal—but the humans behind them can. Algorithms reflect the values, incentives, and blind spots of those who design and deploy them. Trust in AI is therefore not a matter of faith, but of design, law, and vigilance.

There may never be a world where AI is perfectly ethical or fair—but if we treat these systems as tools to be governed, not oracles to be obeyed, we might find a path forward that honors both innovation and integrity.