Imagine a world where police departments don’t just respond to crimes—but try to prevent them before they happen. In many cities, this is no longer fiction. It’s the logic behind predictive policing—the use of data, algorithms, and historical crime patterns to forecast where and when crimes are likely to occur, and sometimes even who is most likely to commit them.

At first glance, this may sound like efficiency in action. Fewer crimes. Smarter resource use. Safer neighborhoods. But beneath that promise lies a tangle of ethical, legal, and social dilemmas: What happens when biased data produces biased predictions? When a person becomes a target based not on actions, but on statistical correlations? When a neighborhood is over-policed not because of present behavior, but past patterns?

Predictive policing forces us to ask: Can we delegate justice to algorithms? And if we do, who gets to define what “justice” looks like?

🔍 How Predictive Policing Works

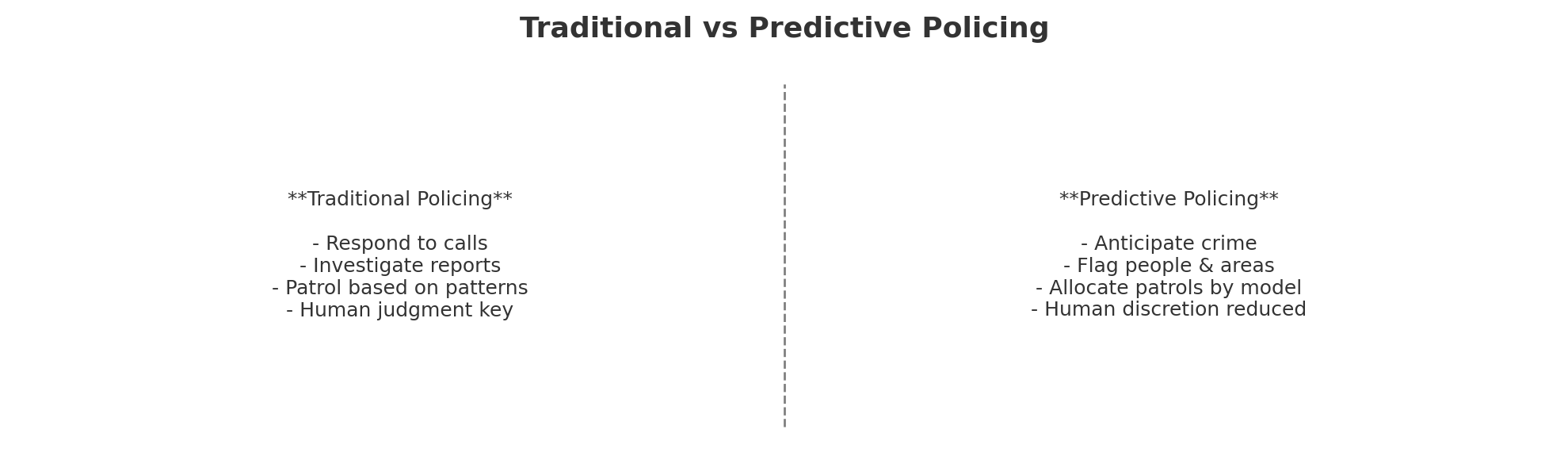

Predictive policing systems use historical data—arrest records, crime reports, location data—to forecast future risks. These predictions typically fall into two types:

-

Place-based predictions: Where is a crime likely to occur?

-

Person-based predictions: Who is likely to commit or be a victim of crime?

Common tools include:

-

HunchLab, PredPol, CompStat: Geographic hot spot analysis

-

Chicago's “Heat List”: A ranked list of individuals flagged as high-risk

-

Facial recognition integrations: Used to track movements or identify suspects in flagged zones

But data is not neutral. If certain communities were historically over-policed, then more arrests happened there—not necessarily more crime. Feeding that data into a predictive model can amplify the same biases, creating a feedback loop.

⚖️ Ethical Dilemmas: Prediction vs. Presumption

Predictive policing raises fundamental moral and legal questions:

❗ Presumption of Innocence

Can someone be flagged for police attention without doing anything wrong? This directly challenges the legal principle that one is innocent until proven guilty.

❗ Profiling and Discrimination

If the system disproportionately flags individuals from certain racial or socioeconomic backgrounds, it effectively automates profiling, even if the algorithm was not designed to do so.

❗ Lack of Transparency

Proprietary algorithms often lack explainability. Defendants and even judges may not understand how risk scores are calculated—yet those scores may influence sentencing or bail decisions.

❗ Consent and Accountability

Who owns the data? Who is responsible when an algorithm leads to a wrongful stop, arrest, or surveillance escalation? The accountability chain becomes blurry.

🧩 Real-World Example: The “Heat List” in Chicago

In 2013, the Chicago Police Department developed a secretive list of individuals it deemed likely to be involved in gun violence, based on arrest history, social networks, and other metrics. Many on the list were unaware of their inclusion, had no history of violence, and were subject to increased surveillance.

A later audit revealed that the system did not significantly reduce crime—but it did raise community distrust and civil rights concerns.

🧾 Conclusion: Policing at the Edge of Justice and Code

Predictive policing sits at a fragile crossroads: efficiency vs fairness, prevention vs profiling, data-driven action vs human rights. It promises to reduce crime—but risks turning entire neighborhoods into suspects, and statistics into handcuffs.

The path forward isn’t to ban all algorithms—but to ensure that their use in public safety is transparent, accountable, and just. That means:

-

Independent audits of bias

-

Clear oversight frameworks

-

Public visibility into how tools are used

-

Legal protections for the flagged

Because justice—whether human or algorithmic—must remain accountable to the people it serves.