Artificial Intelligence was supposed to be our impartial partner—a neutral engine of logic and efficiency. Instead, it’s beginning to mirror something deeply human: bias. When an algorithm decides who gets a loan, a job interview, or even parole, the stakes are high. But what happens when that algorithm has learned from biased historical data? Or when the design choices baked into the system amplify inequality?

In recent years, numerous cases have shown that AI systems can discriminate based on race, gender, age, or geography, often unintentionally—but with real-world consequences. And because these systems are often opaque and complex, bias can go undetected or unchallenged for years.

AI bias is not just a technical glitch—it’s an ethical and legal dilemma that forces us to ask: Who gets to define fairness? And how do we hold machines accountable when their decisions feel objective but aren’t?

🤖 How AI Bias Happens

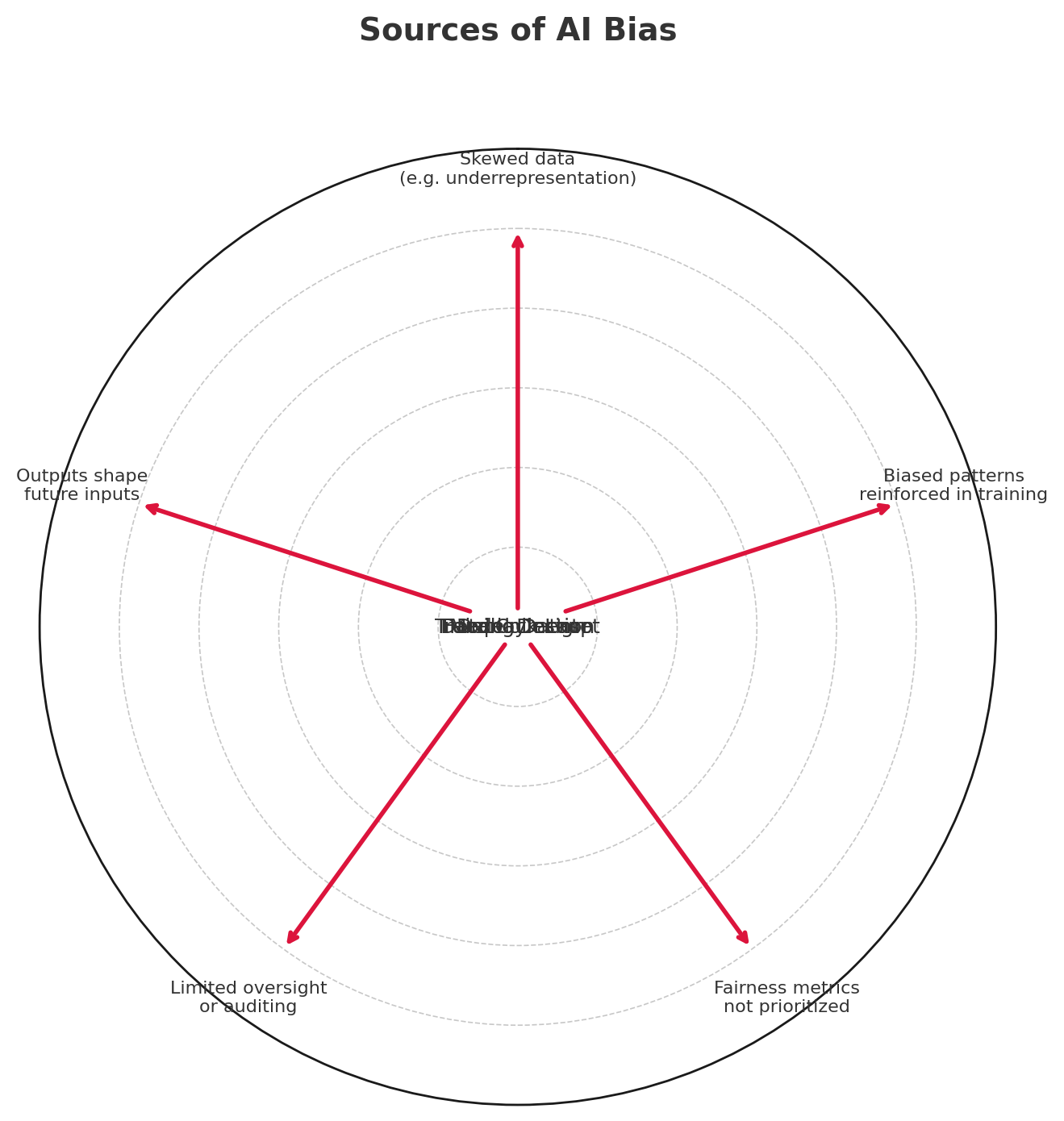

AI bias usually stems from one of these sources:

- Biased training data: If historical hiring practices favored men, an AI trained on those résumés may favor men, too.
- Unrepresentative datasets: Facial recognition systems trained mostly on light-skinned faces perform worse on people of color.
- Design choices: Developers may unknowingly encode assumptions or fail to define “fairness” correctly in the algorithm.
- Feedback loops: Biased predictions reinforce themselves over time, as the system optimizes for past outcomes.
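
To make the first source concrete, here is a minimal sketch of how a model can pick up a proxy for gender from skewed history. Everything in it is an assumption made for illustration: the records are invented, and `learn_keyword_scores` and `score_resume` are hypothetical helpers, not any real hiring system's method.

```python
from collections import defaultdict

# Invented historical records: (résumé keywords, was the candidate hired?).
# The skew is deliberate: past decisions rejected one keyword every time.
HISTORY = [
    ({"python", "chess club"}, True),
    ({"python", "rugby"}, True),
    ({"java", "chess club"}, True),
    ({"python", "women's chess club"}, False),
    ({"java", "women's chess club"}, False),
    ({"java", "rugby"}, True),
]

def learn_keyword_scores(history):
    """Score each keyword by the hire rate of past résumés that contained it."""
    seen = defaultdict(int)
    hired = defaultdict(int)
    for keywords, was_hired in history:
        for keyword in keywords:
            seen[keyword] += 1
            hired[keyword] += int(was_hired)
    return {keyword: hired[keyword] / seen[keyword] for keyword in seen}

def score_resume(keywords, scores, default=0.5):
    """Average the learned keyword scores; unseen keywords get a neutral default."""
    return sum(scores.get(k, default) for k in keywords) / len(keywords)

scores = learn_keyword_scores(HISTORY)
score_a = score_resume({"python", "chess club"}, scores)
score_b = score_resume({"python", "women's chess club"}, scores)
print(f"chess club: {score_a:.2f}, women's chess club: {score_b:.2f}")
```

The two résumés differ by a single keyword, yet the second scores lower. Nothing in the code mentions gender. The model simply reproduces the pattern in the decisions it was given.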

⚠️ Real-World Consequences: When Bias Isn’t Abstract

AI bias is not a theory—it’s a pattern with documented consequences:

📌 Case 1: Amazon’s Hiring Tool

Amazon scrapped an internal AI recruiting tool, trained on 10 years of hiring data, after finding that it downgraded résumés that included the word “women’s,” as in “women’s chess club.” The tool was reflecting past male-dominated hiring patterns.

📌 Case 2: COMPAS and Criminal Sentencing

COMPAS, a risk assessment tool used in U.S. courts, was found in a 2016 ProPublica analysis to assign higher “risk” scores to Black defendants than to white defendants, even when their records were similar.

📌 Case 3: Facial Recognition in Law Enforcement

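The MIT Media Lab’s “Gender Shades” study found that some commercial facial analysis systems misclassified darker-skinned women at error rates of up to about 35%, compared with under 1% for lighter-skinned men. Facial recognition tools have been used in arrests, with serious implications.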
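
Gaps like this stay hidden when a system is judged by a single overall accuracy number. The sketch below shows the general idea of breaking an error rate down by group. The evaluation records are invented, and `error_rates_by_group` is a hypothetical helper rather than any vendor's or study's code.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, prediction in records:
        totals[group] += 1
        errors[group] += int(truth != prediction)
    return {group: errors[group] / totals[group] for group in totals}

# Invented evaluation set: group_a is heavily over-represented.
records = (
    [("group_a", "match", "match")] * 99
    + [("group_a", "match", "no match")] * 1
    + [("group_b", "match", "match")] * 13
    + [("group_b", "match", "no match")] * 7
)

# One number for everyone versus one number per group.
overall_error = sum(t != p for _, t, p in records) / len(records)
by_group = error_rates_by_group(records)

print(f"overall error: {overall_error:.1%}")
for group, rate in sorted(by_group.items()):
    print(f"{group} error: {rate:.1%}")
```

In this made-up data the overall error rate looks small because the larger group dominates the average, while the smaller group sees errors far more often.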

🧩 Why Fixing It Isn’t Simple

Eliminating bias in AI isn’t like patching a bug. It involves:

-

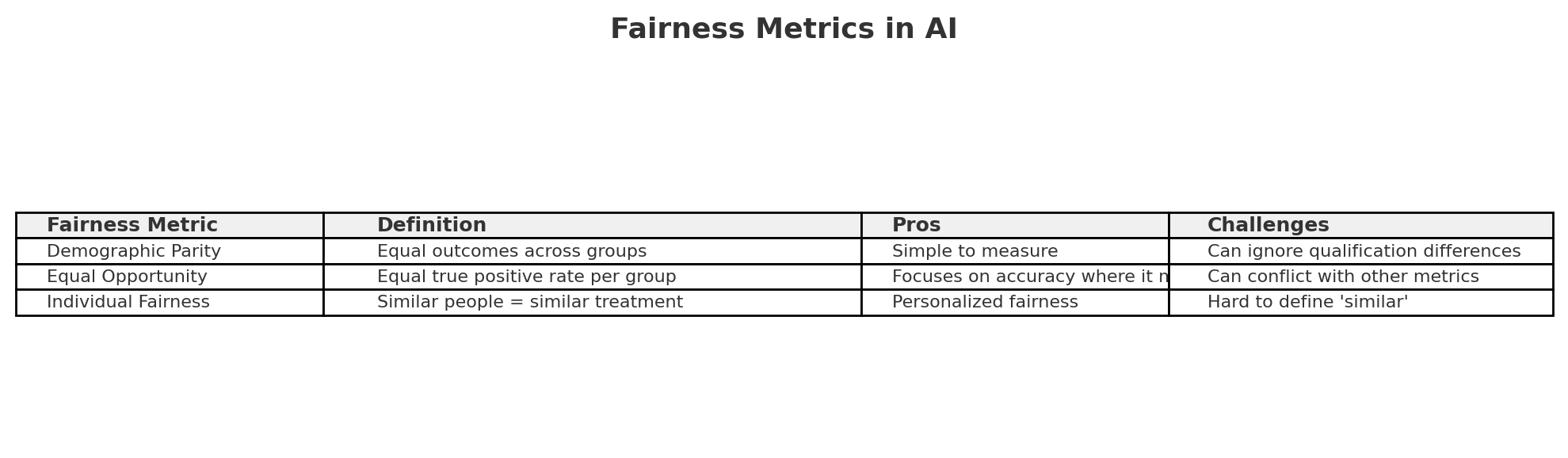

Philosophical questions: What’s a “fair” outcome? Equal accuracy across groups? Or equal opportunity?

-

Technical complexity: Metrics for fairness (e.g. demographic parity vs. equal opportunity) can contradict each other.

-

Legal uncertainty: Few clear laws govern algorithmic discrimination, especially globally.

-

Transparency limits: Many AI systems are black boxes—even developers don’t fully understand their outputs.
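
The clash between fairness metrics can be seen in a few lines of code. This is a toy sketch under stated assumptions: the labels and predictions are invented, the group names are placeholders, and the definitions used are the common textbook ones (demographic parity compares approval rates across groups; equal opportunity compares approval rates among the people who truly qualify).

```python
def selection_rate(preds):
    """Share of people who receive the positive decision."""
    return sum(preds) / len(preds)

def true_positive_rate(labels, preds):
    """Share of truly qualified people (label 1) who receive the positive decision."""
    decisions_for_qualified = [p for y, p in zip(labels, preds) if y == 1]
    return sum(decisions_for_qualified) / len(decisions_for_qualified)

def fairness_gaps(labels, preds):
    """Largest between-group difference on each metric; 0.0 means no gap."""
    rates = [selection_rate(preds[g]) for g in labels]
    tprs = [true_positive_rate(labels[g], preds[g]) for g in labels]
    return {
        "demographic_parity_gap": max(rates) - min(rates),
        "equal_opportunity_gap": max(tprs) - min(tprs),
    }

# Invented labels: 3 of 4 people in group_a qualify, 1 of 4 in group_b.
labels = {"group_a": [1, 1, 1, 0], "group_b": [1, 0, 0, 0]}

# Model 1 predicts every label correctly.
accurate = fairness_gaps(labels, {"group_a": [1, 1, 1, 0], "group_b": [1, 0, 0, 0]})

# Model 2 approves exactly half of each group.
balanced = fairness_gaps(labels, {"group_a": [1, 1, 0, 0], "group_b": [1, 1, 0, 0]})

print("accurate model:", accurate)
print("balanced model:", balanced)
```

When the groups have different base rates, as they do here, the perfectly accurate model satisfies equal opportunity but approves the groups at different rates, while the model that equalizes approval rates turns away a qualified person in one group only. Closing one gap opens the other, so someone has to choose which notion of fairness matters for the decision at hand.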

🧾 Conclusion: Algorithms Aren’t Neutral—and Neither Are We

AI systems don’t create bias—they absorb and amplify it. The challenge isn’t just technical; it’s deeply human. We have to decide what fairness means, who gets to define it, and how we audit machines that make life-changing decisions.

To build better AI, we need not just better code but better conversations among developers, ethicists, lawmakers, and communities. When bias is embedded in algorithms, it is often invisible, but its consequences are real.