Every like, scroll, search, and pause online is tracked, analyzed, and often sold. You might think you’re simply browsing or chatting—but behind the screen, your behavior is being mined like digital gold. In our hyperconnected world, surveillance capitalism has become the engine of the modern Internet: an economic model that monetizes your personal data for prediction and control.

Popularized by Harvard Business School professor emerita Shoshana Zuboff, most notably in her 2019 book *The Age of Surveillance Capitalism*, the term describes a system in which companies harvest behavioral data to forecast, and influence, what we’ll do next. It’s not just about ads. It’s about power. But as platforms become more embedded in our lives, the ethical and legal dilemmas grow: Where is the line between personalization and manipulation? Between convenience and coercion?

This article explores the depth and complexity of surveillance capitalism, using real-world cases, ethical conflicts, and visual frameworks to unpack what it means to live in an economy where the most valuable product is you.

🧠 What Is Surveillance Capitalism?

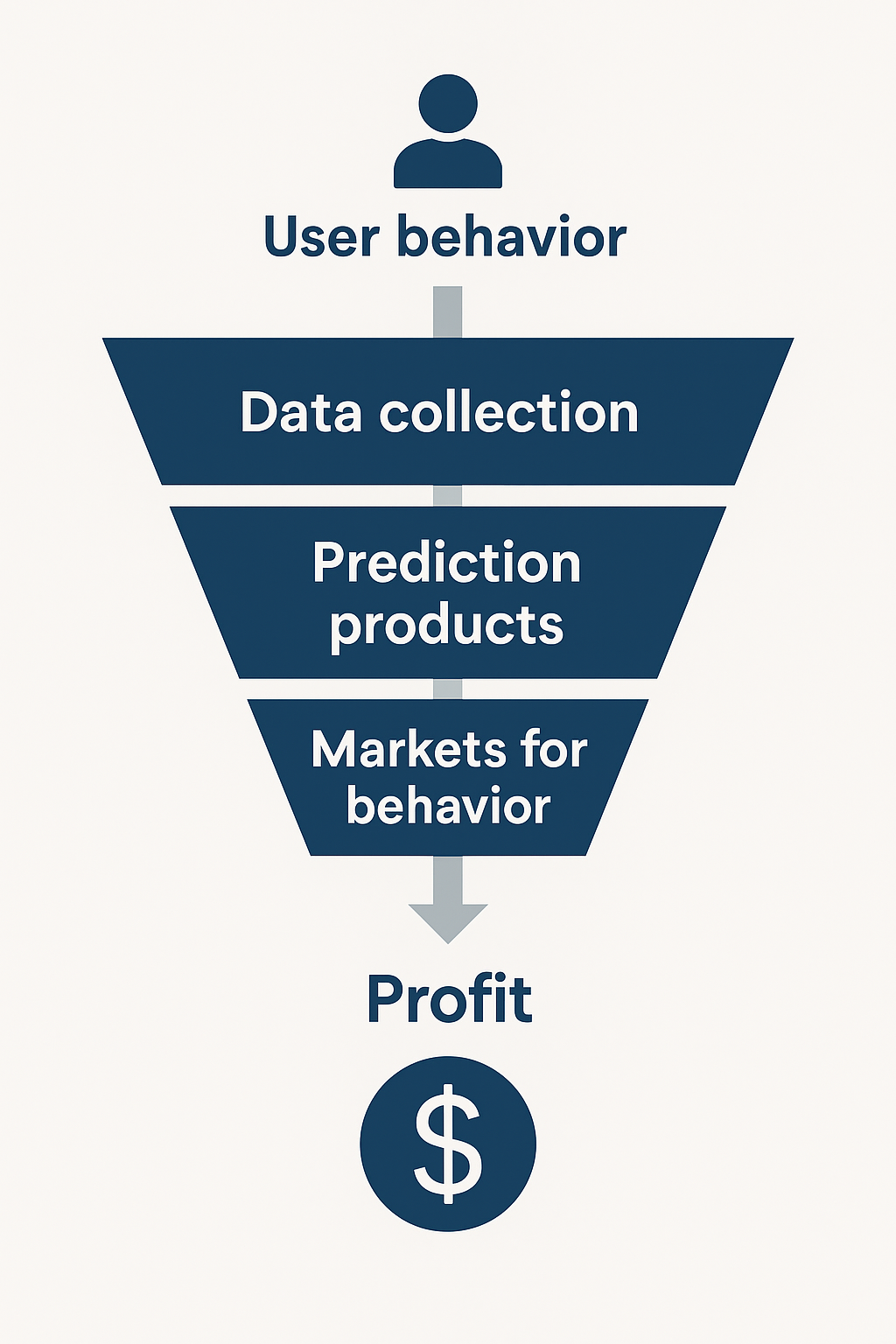

Surveillance capitalism refers to a business model where user data—often collected without explicit consent—is used not just to serve ads but to shape and steer behavior for economic gain.

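- It begins with data extraction: likes, scrolls, searches, pauses, and other behavioral signals are collected at scale.
- That data feeds machine learning models that forecast what users will do next.
- The predictions are sold to advertisers, governments, and even hedge funds.
- Ultimately, this creates systems designed to nudge, not just observe.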

🧩 Real-World Examples: Cases That Cross the Line

🔒 Case 1: Facebook–Cambridge Analytica (2018)

Data from up to 87 million Facebook profiles was harvested through a personality quiz app, most of it from the friends of people who took the quiz, and used to micro-target political ads without those users’ consent. The fallout was global. It showed how personal data can be weaponized in attempts to manipulate elections, not just to sell products.

🛍️ Case 2: Amazon’s Facial Recognition in Policing

Amazon’s Rekognition facial recognition service was used by police departments in the United States to identify suspects. But independent tests found that its accuracy varied by race, and the public was largely not informed. This created an ethical storm about consent, bias, and corporate power in public life.

⚖️ Legal Vacuum: The Data Laws That Don’t Exist (Yet)

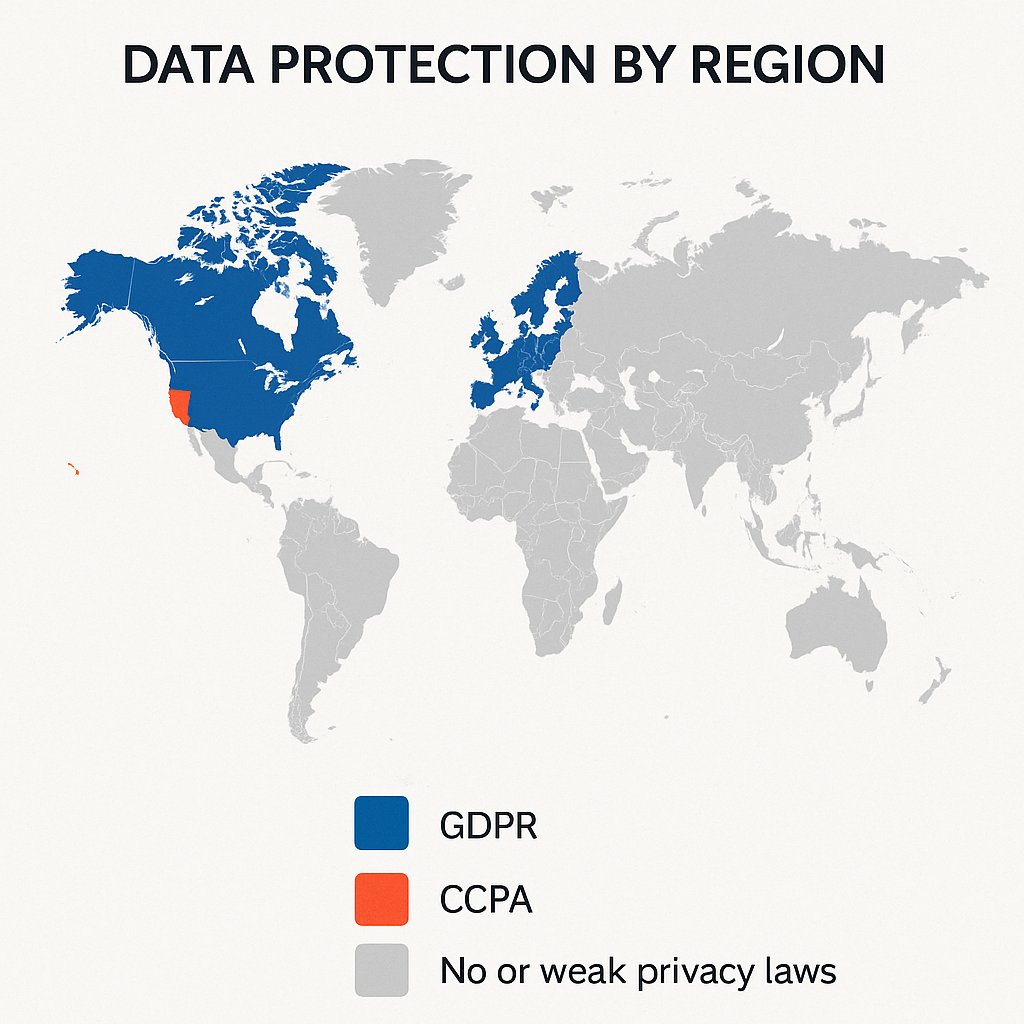

There is no universal digital rights framework. Instead, there’s a patchwork of laws:

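- Europe’s GDPR (General Data Protection Regulation) requires transparency and a lawful basis, such as consent, for processing personal data.
- California’s CCPA (California Consumer Privacy Act) gives users limited rights, including the right to opt out of the sale of their personal data.
- Elsewhere, protection is uneven. Many countries have data protection laws on paper, but enforcement varies widely, and the United States still has no comprehensive federal privacy law.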

🤖 Ethical Dilemmas: Personalization vs. Manipulation

On the surface, targeted ads and personalized feeds seem harmless—even helpful. But under the hood, algorithms often prioritize engagement over well-being. That means:

- Outrage and falsehood often spread faster than accurate information.
- You’re shown what keeps you scrolling, not what’s good for you.
- Systems learn to exploit psychological vulnerabilities.

This is not just algorithmic efficiency. It’s a revenue model based on behavioral engineering.

📉 When Prediction Becomes Control

As systems evolve, they’re not just predicting what we’ll do. They’re nudging us toward doing it. From the Netflix queue to political radicalization, the line between forecasting and influence blurs.

This raises serious ethical questions:

- Can we meaningfully consent to something we don’t understand?
- Who is accountable when algorithms shape our reality?

🛠️ Can We Build an Alternative?

Yes—but it’s difficult.

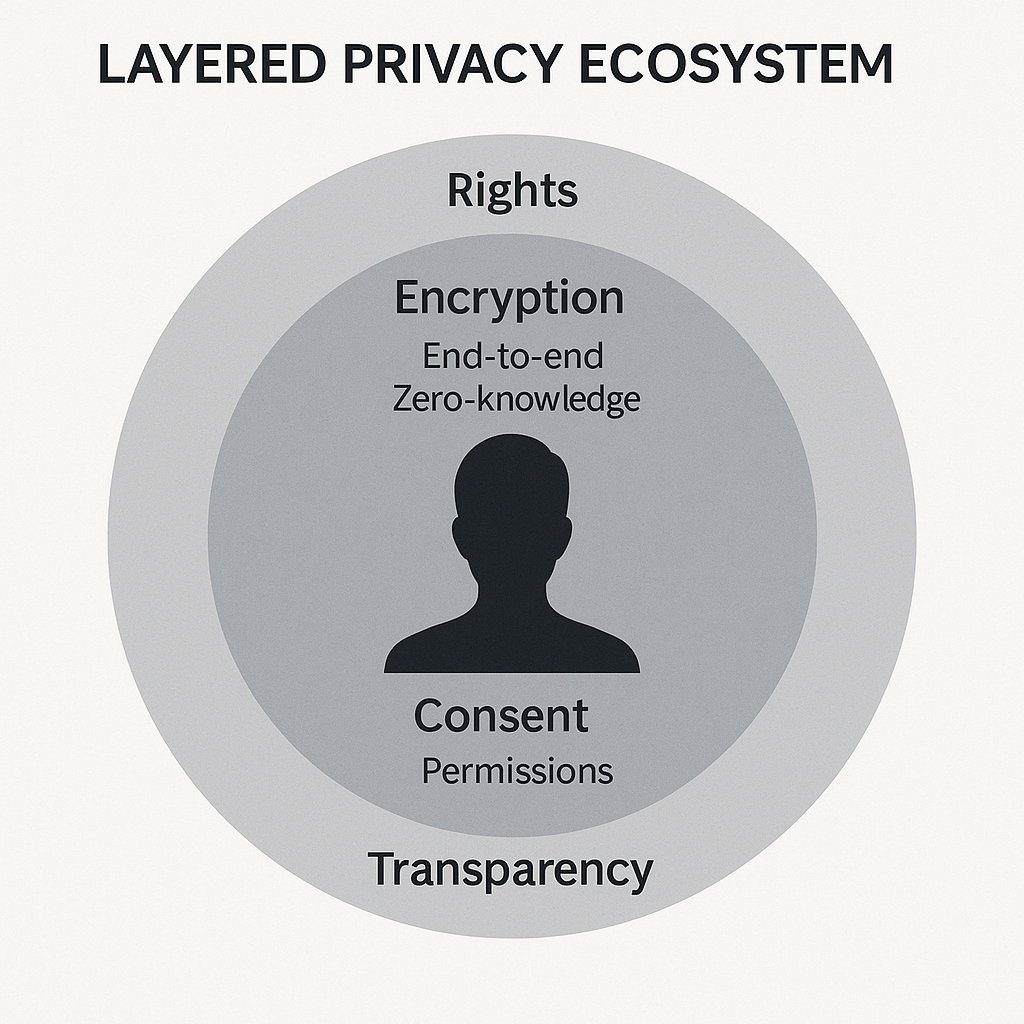

Some proposed solutions:

- Data trusts that give communities control over shared data.
- Privacy-first tools, such as the Signal messenger or the Brave browser, that reject surveillance-based business models.
- Legally enforced transparency in algorithmic decision-making.

🧾 Final Reflection: What Kind of Internet Do We Want?

Surveillance capitalism is not just a business model—it’s a political and ethical paradigm. It redefines relationships between citizens, corporations, and states. While some may argue that users “trade privacy for convenience,” the reality is that most people never truly consented to this trade in the first place.

The challenge ahead isn’t to reject technology—but to demand a version of it that respects autonomy, transparency, and dignity. This means building new rules, new tools, and new cultures that place human agency at the center—not behind a paywall or buried in terms of service.