From hospitals hit by ransomware to deepfakes impersonating CEOs, the cybersecurity landscape in 2024 feels less like a battleground and more like a permanent state of siege. As we digitize more of our lives—finance, health, identity, infrastructure—the line between “online” and “real life” disappears. But with this integration comes exposure. And that exposure isn’t just technical—it’s deeply ethical, legal, and human.

Cybersecurity today is not merely about protecting data. It’s about protecting trust, autonomy, and safety in an increasingly unpredictable digital world. What happens when algorithms can be hacked? When identity can be forged at scale? When attacks go beyond theft to coercion or manipulation?

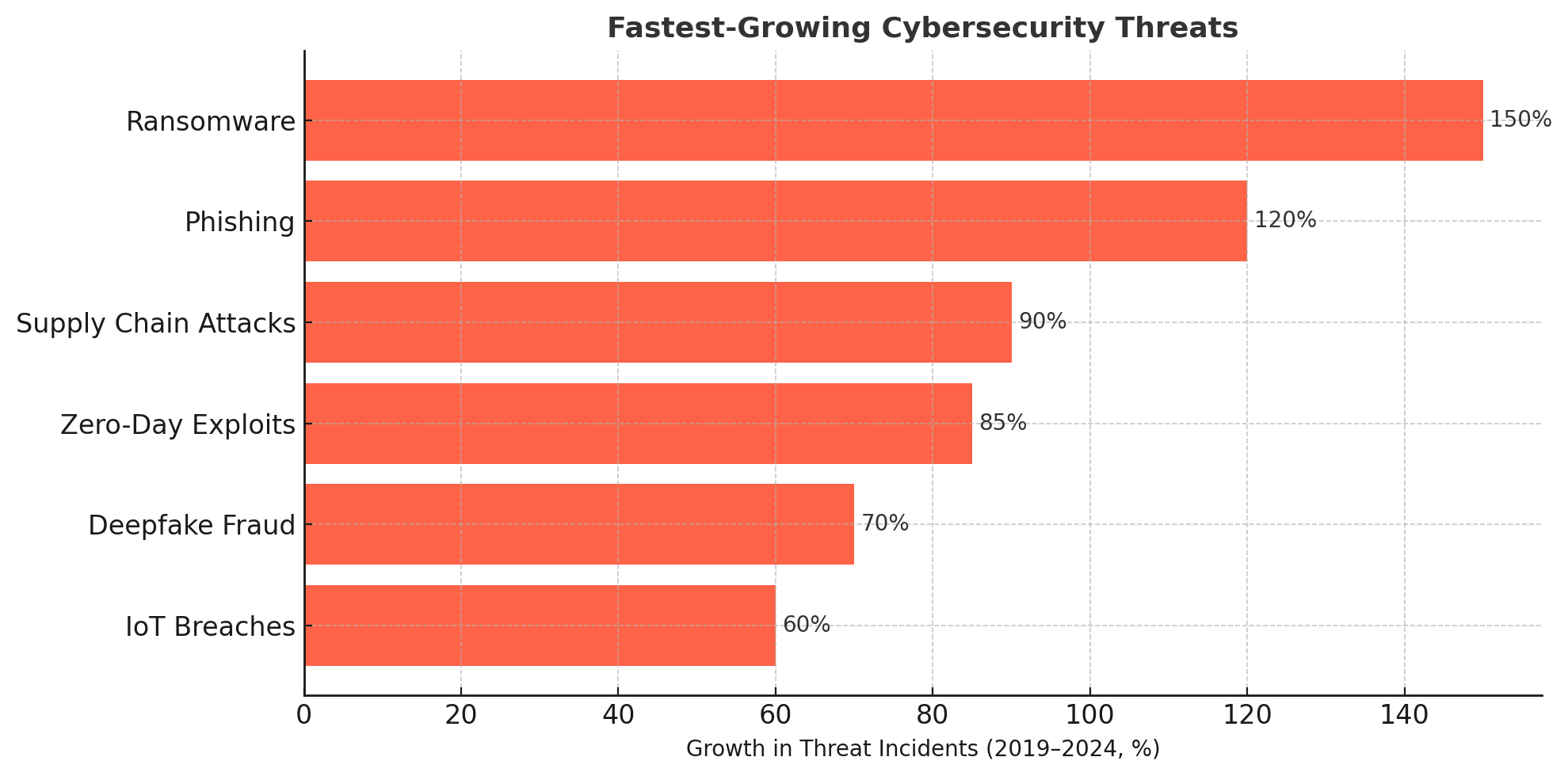

This article explores the major cybersecurity trends shaping this new reality—and why no easy solution exists.

🧬 Trend #1: Deepfake Fraud and Identity Manipulation

One of the most unsettling developments is the rise of AI-generated fraud—from impersonated voices to manipulated video. Attackers no longer need to break into your system—they just need to trick someone who trusts you.

🎭 Ethical dilemma:

- If a deepfake impersonates a CEO and tricks an employee into wiring $1M, who’s at fault?
- How can platforms moderate synthetic media without infringing on expression?
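One control that blunts the CEO scenario above is to make payment instructions verifiable by something a cloned voice or face cannot supply. The sketch below assumes a secret key shared out of band between an executive’s approval system and the finance team. It tags each wire instruction with an HMAC-SHA256 and rejects any instruction whose tag does not match. The key, the field names, and the functions `sign_instruction` and `verify_instruction` are hypothetical illustrations built on Python’s standard `hmac`, `hashlib`, and `json` modules. They do not describe any particular bank’s or vendor’s process.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret, provisioned out of band (e.g. in a secrets
# manager or hardware token), never spoken on a call or sent by email.
SHARED_KEY = b"example-key-do-not-use-in-production"


def sign_instruction(instruction: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding."""
    # sort_keys + fixed separators give the same bytes for the same content.
    payload = json.dumps(instruction, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_instruction(instruction: dict, tag: str, key: bytes) -> bool:
    """Accept the instruction only if its tag matches (constant-time compare)."""
    expected = sign_instruction(instruction, key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    wire = {"to": "ACME-LTD-001", "amount_usd": 1_000_000, "ref": "Q3-invoice"}
    tag = sign_instruction(wire, SHARED_KEY)

    print(verify_instruction(wire, tag, SHARED_KEY))      # True
    tampered = dict(wire, to="ATTACKER-999")
    print(verify_instruction(tampered, tag, SHARED_KEY))  # False
    # A request that arrives only by voice or video carries no valid tag.
    print(verify_instruction(wire, "", SHARED_KEY))       # False
```

The design choice is that authority comes from the key, not from the face or voice on the call. A deepfake can reproduce how an executive looks and sounds, but not a secret it has never seen. Real deployments more often rely on call-backs to known numbers, multi-person approval, or asymmetric digital signatures. HMAC is used here only because it fits in the standard library.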

🧪 Trend #2: Ransomware as a Business Model

No longer the work of lone hackers, ransomware has become industrialized, with entire supply chains for malware kits, negotiation services, and profit-sharing.

- Hospitals, schools, and public infrastructure are prime targets
- Cyber insurers have often covered ransom payments, which raises questions of moral hazard
- Some governments are moving to ban ransom payments, but bans may worsen the consequences for victims

🧷 Trend #3: Legal Grey Zones in Cross-Border Attacks

Cyberattacks rarely respect geography, but laws do.

🌍 The dilemma:

- A ransomware group in country A hacks a utility in country B
- The attack runs through cloud infrastructure hosted, unknowingly, in country C
- Who is responsible? Which law applies? Can prosecution even happen?

The result: many attacks occur in a legal fog where justice is hard to achieve and deterrence is weak.

🧠 Trend #4: AI and Cyber Defense Arms Race

Just as attackers use AI to generate phishing emails or map systems, defenders are using AI to detect anomalies, predict intrusions, and automate response.

🤖 But:

- AI systems themselves can be manipulated (e.g., through adversarial attacks)
- AI auditing is still in its infancy: how do we ensure fairness in automated defense systems?
- Should AI-driven decisions about human behavior (e.g., flagging suspicious logins) require human oversight?
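To make the detection side concrete, here is a deliberately simple sketch of anomaly scoring for logins. It uses a plain statistical baseline (a z-score against an account’s own history) rather than a machine-learning model. The feature (hourly failed-login counts), the 3.0 threshold, and the names `score_logins` and `Verdict` are hypothetical. In keeping with the oversight question above, it only flags events for human review instead of blocking anyone automatically.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Verdict:
    value: float
    z_score: float
    needs_human_review: bool


def score_logins(baseline: list[float], observed: list[float],
                 threshold: float = 3.0) -> list[Verdict]:
    """Flag observations more than `threshold` standard deviations above baseline.

    `baseline` is assumed (hypothetically) to hold hourly failed-login counts
    for one account over recent weeks.
    """
    mu = mean(baseline)
    # A flat baseline has zero spread; use a tiny value so any increase is flagged.
    sigma = pstdev(baseline) or 1e-9
    verdicts = []
    for x in observed:
        z = (x - mu) / sigma
        # Flag, don't block: a person makes the final call on flagged events.
        verdicts.append(Verdict(x, round(z, 2), z > threshold))
    return verdicts
```

With a baseline of `[2, 3, 1, 2, 4, 2, 3, 2, 1, 3]` failed logins per hour, an observed hour with 3 failures stays below the threshold while an hour with 40 is flagged. Real systems combine many signals and learned models, which is exactly where the auditing and fairness concerns above come in.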

🏛️ Trend #5: Cybersecurity Meets Human Rights

As digital threats rise, so do calls for zero-trust architectures, biometric authentication, and mass surveillance. But these raise serious ethical concerns:

- Where do we draw the line between safety and privacy?
- Who has access to biometric data, and can it ever be deleted?
- Will cybersecurity become an excuse for overreach?

Laws like GDPR and CCPA address some of this, but not all. And in authoritarian regimes, cybersecurity is often weaponized to justify control.

⚖️ Toward Ethical Cybersecurity

To navigate this terrain, we need more than better tools—we need better governance, transparency, and ethics:

- Privacy-by-design must become a baseline
- Open disclosure of breaches should be incentivized, not punished
- Public-private cooperation must evolve from PR to policy
- Cyber-literacy should be treated as a civic skill, not just IT training

🧾 Conclusion: No Silver Bullets, Only Smarter Shields

Cybersecurity is no longer a backend issue—it’s a frontline question of social trust, democratic resilience, and global stability. As threats grow more intelligent, interconnected, and opaque, defending against them will require not just technology, but ethical clarity, legal frameworks, and public awareness.

There is no firewall for the human factor. But there is hope—in how we choose to build, legislate, and educate for a safer digital future.

📰 Recent Developments & My Perspective

Here are some of the most relevant recent incidents, trends, and emerging solutions in cybersecurity (2024–2025), followed by my view as the author.

🔍 Recent Incidents & Trends

- Mass deepfake and voice scams surge
  - In Q1 2025 alone, documented financial losses from deepfake-enabled fraud exceeded $200 million.
  - The number of deepfake files is reported to have grown from ~500,000 in 2023 to ~8 million in 2025.
  - AI fraud attempts in 2025 are reportedly up ~19% compared with 2024 totals.
- $25M deepfake scam case
  - Arup, a UK engineering firm, was defrauded of $25 million after attackers used a deepfake video call impersonating its executives.
  - Reports suggest such deepfake attacks are no longer rare stunts but an emerging organized-crime tool.
- Healthcare and hospital cyberattacks intensify
  - Frederick Health Hospital was hit by ransomware, forcing it to divert ambulances and disrupting services.
  - U.S. kidney dialysis provider DaVita suffered a ransomware attack that affected ~2.7 million people.
  - Multiple health systems reported major breaches in the first half of 2025, affecting hundreds of thousands of individuals.
  - Change Healthcare’s 2024 cyberattack exposed how vulnerable critical third-party providers are, with cascading effects across medical systems.
- Law enforcement pushes back on ransomware groups
  - The U.S. DOJ announced the disruption of the BlackSuit (Royal) ransomware group, taking down its domains and servers.
  - In the U.K., the government is proposing bans on ransom payments by public-sector and critical-infrastructure entities.
- New technical defenses to combat synthetic media
  - Researchers proposed WaveVerify, an audio watermarking scheme for authenticating voice recordings and detecting manipulated media.
  - A GAN-based approach to detecting AI deepfakes in payment imagery has been reported to distinguish manipulated from genuine transaction images with very high accuracy (>95%).
  - In India, Vastav AI, a cloud-based deepfake detection service, launched to detect AI-altered videos, images, and audio in real time.
- Regulatory action targeted at deepfakes
  - In the U.S., the TAKE IT DOWN Act was signed into law in May 2025. It requires websites and platforms to remove nonconsensual intimate imagery, including AI-generated deepfakes, on request.

🧠 My Perspective & What I Predict (Subjective)

As the author, here’s what I believe is unfolding — and where we are headed, for better and worse.

- The era of synthetic attacks has arrived. Deepfake fraud, identity manipulation, and attacks built on synthetic media are no longer fringe novelties but core threat vectors. In many ways they are more insidious than traditional intrusions: easy, cheap, and scalable. The examples above confirm this is not a future threat; it is unfolding now.

- Trust is now the battleground. When your own eyes and ears can be fooled, the first defense is often skepticism. But not everyone has the tools or training to tell authentic media from synthetic. Trust will keep eroding, especially in institutions, media, and “official” communication.

- Defenders are playing catch-up. Watermarking schemes, GAN-based detectors, and forensic tools are promising, but they lag behind the creativity and speed of attackers. Detection models trained on older synthetic examples often fail on newer ones.

  Security architecture must evolve from “find the intrusion” to “verify every transaction, media item, and identity claim”: zero trust extended to identity itself.

- Legal and regulatory structures are cracking under pressure. The TAKE IT DOWN Act is a meaningful step on deepfake content, but it addresses only one narrow area. Jurisdictional complexity, cross-border attacks, weak enforcement, and loopholes remain massive obstacles. I expect more nations to adopt similar laws, some more draconian than others, and debates over encryption, backdoors, and surveillance to intensify.

- Healthcare systems are fragile, soft targets. As health systems rely more on digital operations and third-party providers, any weakness cascades into human harm. The 2024 ransomware attack on Synnovis, a pathology provider to NHS hospitals in London, has been linked to a patient’s death. That is not hyperbole; it demonstrates that cyberattacks can kill.

  I believe hospitals and healthcare groups will remain high-value targets, but we will also see more lawsuits, regulatory inquiries, and demands from accreditation bodies for “cyber safety compliance.”

- A “Trust Officer” role may become essential. Some organizations are already hiring a Chief Trust Officer to proactively rebuild integrity, manage incident disclosure, and connect ethical oversight with security.

  I think this role may expand, but its success will depend on genuine accountability, not symbolic titles.

- In the next 5–10 years, synthetic attacks may morph into tools of coercion. We already see phishing, financial fraud, and identity theft. But what about political manipulation, synthetic disinformation, automated deepfake blackmail, or forced confessions? The potential for coercion is real. We must guard not just data, but human will.

- Public resilience is lacking but essential. Many people are ill-equipped to judge whether media or identity claims can be trusted. Education campaigns, “deepfake literacy,” and tools built into platforms (warning flags, provenance signals) will be vital.

- But there is hope. The accelerating pace of detection research, combined with legal action and corporate accountability, suggests we are entering a phase of defense consolidation. The arms race will continue, but defenders are no longer starting from zero.

In short: 2024–2025 is not the calm before the storm — it's the storm itself. The scale and sophistication of attacks are accelerating. The difference between dystopia and resilience will come down to whether we can reclaim trust as a lived reality — not just a slogan.