Summary

Modern systems generate more data, variables, and interactions than any human team can reliably process. Artificial intelligence excels not because it is “smarter,” but because it is structurally better suited to manage complexity at scale. This article explains where humans struggle, how AI models handle complex environments more effectively, and how organizations can combine both for superior outcomes.

Overview: What “Complexity” Really Means

Complexity is not just “many tasks.” It is the interaction of many variables, uncertainty, feedback loops, and time pressure.

Humans are excellent at intuition, context, and ethics. But when decisions involve thousands of signals updating in real time, cognitive limits appear. AI systems, especially those deployed by companies like Google, Amazon, and Microsoft, are explicitly designed to operate in such environments.

A widely cited McKinsey & Company analysis reports that data-driven decision systems outperform human-only decisions by 20–30% in complex operational settings, particularly where signals conflict or change rapidly.

Complexity is where AI stops being optional.

Pain Points: Where Humans Struggle With Complexity

1. Cognitive Bandwidth Limits

Humans can actively process only a limited number of variables at once.

Why it matters:

Modern systems often involve thousands.

Consequence:

Oversimplification and missed signals.

2. Bias and Emotional Interference

Human decisions are shaped by experience, fear, and incentives.

Impact:

Inconsistent outcomes under pressure.

Real example:

Risk teams ignoring early warning signs due to “false alarm fatigue.”

3. Inability to Learn at Machine Speed

Humans learn slowly from experience.

Result:

Delayed adaptation to new patterns.

4. Fatigue and Attention Drift

Human attention and accuracy degrade over long periods.

Reality:

Complex systems do not slow down.

5. Poor Pattern Recognition at Scale

Humans excel at stories, not multidimensional patterns.

Outcome:

Hidden correlations remain invisible.

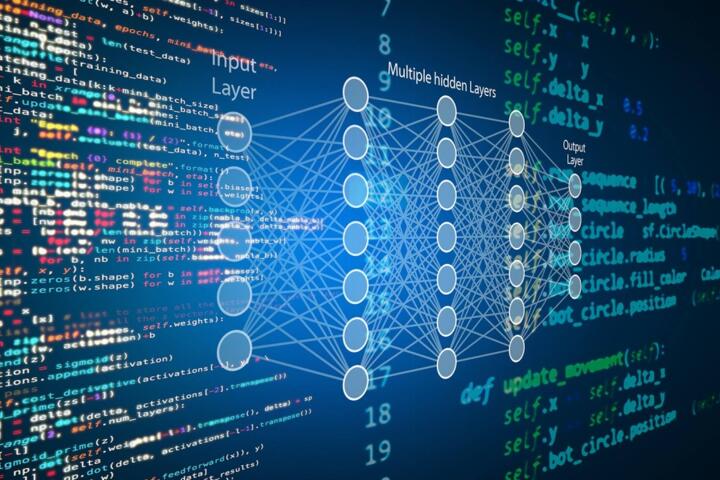

Why AI Handles Complexity Better

Parallel Processing

AI evaluates thousands of variables simultaneously.

Why it works:

No cognitive bottleneck.

In practice:

Fraud detection models score each transaction within milliseconds, across millions of transactions.
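The idea can be shown in miniature with a single scoring function that weighs every feature of a transaction in one pass and is then applied uniformly to a batch. This is a minimal sketch assuming a logistic scoring model. The feature names, weights, bias, and threshold are hypothetical stand-ins for values a trained model would learn. The plain loop is for readability; production systems vectorize or distribute this work across machines.

```python
import math

# Hypothetical learned weights: one per input feature
WEIGHTS = {"amount_zscore": 1.2, "new_device": 2.0, "foreign_ip": 1.5, "night_time": 0.7}
BIAS = -4.0  # hypothetical intercept; keeps ordinary transactions at low probability


def fraud_probability(txn: dict[str, float]) -> float:
    """Logistic score that combines all features of one transaction at once."""
    z = BIAS + sum(weight * txn.get(name, 0.0) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def flag_batch(txns: list[dict[str, float]], threshold: float = 0.5) -> list[int]:
    """Return the indices of transactions whose fraud probability exceeds the threshold."""
    return [i for i, txn in enumerate(txns) if fraud_probability(txn) > threshold]
```

Because the same function scores every transaction, the millionth transaction gets exactly the same scrutiny as the first.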

Probabilistic Reasoning

AI operates on likelihoods, not certainties.

Benefit:

Graceful handling of uncertainty.

Example:

Demand forecasting models updating predictions continuously.
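Working with likelihoods means a forecast carries an estimate of its own uncertainty, and both are revised as each new observation arrives. The sketch below uses simple exponential smoothing for the demand level, plus a smoothed squared error for the spread. It is a minimal sketch under stated assumptions: the class name, the `alpha` value, and the normal-error interval are illustrative choices, not the method of any particular forecasting product.

```python
import math
from dataclasses import dataclass


@dataclass
class DemandForecast:
    """Hypothetical minimal model: smoothed demand level plus smoothed error variance."""
    level: float
    variance: float
    alpha: float = 0.3  # weight given to the newest observation

    def update(self, observed: float) -> None:
        """Fold one new demand observation into both the level and the uncertainty."""
        error = observed - self.level
        # Uncertainty adapts too: large surprises widen the interval
        self.variance = self.alpha * error ** 2 + (1 - self.alpha) * self.variance
        # Equivalent to alpha * observed + (1 - alpha) * previous level
        self.level += self.alpha * error

    def interval(self, z: float = 1.96) -> tuple[float, float]:
        """Approximate 95% range, assuming roughly normal forecast errors."""
        half_width = z * math.sqrt(self.variance)
        return (self.level - half_width, self.level + half_width)
```

A planner can then act on "demand will likely fall between X and Y" rather than on a single number that hides how uncertain it is.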

Continuous Learning

Modern models improve with new data.

Result:

Systems adapt faster than teams can be retrained.
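Improving with new data can be as simple as nudging a model's parameters after each example, instead of retraining from scratch. The sketch below shows online stochastic gradient descent for a linear model with squared loss. The function names and the learning rate are illustrative assumptions. Real deployments usually validate an updated model before it goes live.

```python
def sgd_step(weights: list[float], x: list[float], y: float, lr: float = 0.01) -> list[float]:
    """One online update of a linear model on a single new example (squared loss)."""
    prediction = sum(w * xi for w, xi in zip(weights, x))
    error = prediction - y
    # Gradient of 0.5 * error**2 with respect to weight i is error * x_i
    return [w - lr * error * xi for w, xi in zip(weights, x)]


def learn_online(stream, n_features: int, lr: float = 0.01) -> list[float]:
    """Consume (features, target) pairs one at a time, updating after each."""
    weights = [0.0] * n_features
    for x, y in stream:
        weights = sgd_step(weights, x, y, lr)
    return weights
```

With `x = [1.0, v]`, the first weight acts as an intercept. On a stream generated by `y = 3 + 2v`, the weights move toward `[3, 2]` as examples arrive.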

Consistency Under Pressure

AI does not panic or tire.

Impact:

Stable decision quality over time.

System-Level Optimization

AI optimizes globally, not locally.

Human limitation:

People focus on immediate wins.
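A toy routing problem shows the difference between local and global optimization. A greedy rule always takes the cheapest next step, while a shortest-path search minimizes the cost of the entire route. The network and costs below are hypothetical. Dijkstra's algorithm stands in here for the much larger optimizers used in real logistics systems. The greedy function assumes an acyclic network in which every path eventually reaches the goal.

```python
import heapq

# Hypothetical network: GRAPH[node][neighbor] = cost of that leg
GRAPH = {
    "A": {"B": 1, "C": 4},
    "B": {"D": 10},
    "C": {"D": 2},
    "D": {},
}


def greedy_path(graph, start, goal):
    """Local optimizer: always take the cheapest next leg (assumes an acyclic graph)."""
    path, cost, node = [start], 0, start
    while node != goal:
        nxt, leg_cost = min(graph[node].items(), key=lambda kv: kv[1])
        path.append(nxt)
        cost += leg_cost
        node = nxt
    return path, cost


def cheapest_path(graph, start, goal):
    """Global optimizer: Dijkstra's algorithm minimizes total route cost."""
    queue = [(0, start, [start])]
    seen = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return path, cost
        if node in seen:
            continue
        seen.add(node)
        for nxt, leg_cost in graph[node].items():
            if nxt not in seen:
                heapq.heappush(queue, (cost + leg_cost, nxt, path + [nxt]))
    return None, float("inf")
```

Here the greedy rule grabs the cheap first leg to B and pays 11 in total. The global search accepts a more expensive first leg to C and pays only 6.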

Solutions and Recommendations With Concrete Guidance

1. Delegate High-Dimensional Decisions to AI

What to do:

Use AI where decisions involve many variables.

Why it works:

Humans are not built for high-dimensional problems.

Tools:

- predictive analytics platforms
- real-time decision engines

Result:

Higher accuracy, lower variance.

2. Keep Humans for Judgment and Ethics

What to do:

Let AI estimate “what” is likely; let humans decide what “should” happen.

Why it works:

AI lacks moral context.

In practice:

Human approval layers in sensitive domains.
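A human approval layer can be written as an explicit routing rule. Model recommendations are applied automatically only when they are both confident and outside sensitive domains; everything else goes to a person. The domain list, confidence threshold, and names below are hypothetical policy choices. The point is that the boundary between machine and human authority becomes explicit and testable.

```python
from dataclasses import dataclass

# Hypothetical policy: domains where a person must always sign off
SENSITIVE_DOMAINS = {"credit", "medical", "hiring"}


@dataclass
class Decision:
    domain: str
    recommendation: str
    confidence: float  # model's probability for its own recommendation


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Return 'auto_apply' or 'human_review' for a model recommendation."""
    if decision.domain in SENSITIVE_DOMAINS:
        return "human_review"  # ethics and accountability stay with people
    if decision.confidence < min_confidence:
        return "human_review"  # uncertain cases get a second look
    return "auto_apply"
```

Logging which path each decision took also creates the audit trail that accountability requires.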

3. Use Ensemble Models for Complex Systems

What to do:

Combine multiple models.

Why it works:

Reduces reliance on any single model's errors and biases.

Outcome:

More robust predictions.
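In its simplest form, an ensemble averages the predictions of several independently built models, so one model's errors are diluted by the others. The spread between members is a useful signal that the models disagree and a human should look closer. This is a minimal sketch that assumes each model is a plain callable. Weighted averaging and stacking are common refinements not shown here.

```python
from statistics import mean, pstdev
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def ensemble_predict(models: Sequence[Model], x: Sequence[float]) -> tuple[float, float]:
    """Return (average prediction, population std-dev of member predictions)."""
    predictions = [model(x) for model in models]
    # The mean is the ensemble's answer; the spread measures member disagreement
    return mean(predictions), pstdev(predictions)
```

For example, three stand-in members predicting 10, 12, and 14 yield an ensemble estimate of 12 with a spread of about 1.63.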

4. Monitor Drift, Not Just Accuracy

What to do:

Track changes in input distributions.

Why:

Complex environments evolve.

Result:

Earlier intervention.
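One common way to track input drift is the Population Stability Index (PSI). It bins a feature, compares the share of values per bin in a baseline sample ($e_i$) against a current sample ($a_i$), and sums $(a_i - e_i)\ln(a_i / e_i)$. A frequently used rule of thumb, originating in credit risk, treats values below 0.1 as stable and values above 0.25 as significant drift. These cutoffs are conventions, not guarantees. In this minimal sketch the bin edges are passed in explicitly; in practice they are often taken from baseline quantiles.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges):
    """Fraction of values falling in each bin defined by sorted interior edges."""
    if not values:
        raise ValueError("values must be non-empty")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(baseline, current, edges, eps=1e-6):
    """Population Stability Index of one input feature: baseline vs current sample."""
    expected = bin_shares(baseline, edges)
    actual = bin_shares(current, edges)
    score = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # floor empty bins to avoid log(0)
        score += (a - e) * math.log(a / e)
    return score
```

Running a check like this per feature on a schedule can surface a shifting environment before accuracy metrics, which wait for outcomes, reveal it.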

5. Train Teams to Interpret AI Outputs

What to do:

Focus on decision literacy, not coding.

Impact:

Better trust and adoption.

Mini-Case Examples

Case 1: Supply Chain Complexity

Company:

Global retail distributor

Problem:

Human planners could not adjust to real-time disruptions.

Solution:

AI-driven demand and routing models.

Result:

- 18% reduction in stockouts
- 12% logistics cost savings

Case 2: Financial Risk Management

Company:

Regional bank

Problem:

Risk officers missed subtle correlations.

What changed:

ML-based credit risk modeling.

Outcome:

- 25% reduction in defaults
- Faster loan approvals

Comparison Table: AI vs Humans in Complex Decision-Making

| Aspect | Humans | AI Systems |
|---|---|---|
| Variables handled | Dozens | Thousands+ |
| Fatigue | High | None |
| Bias | High | Model-dependent |
| Learning speed | Slow | Continuous |
| Consistency | Variable | Stable |

Practical Checklist: Using AI for Complexity

- Identify decisions with more than 50 variables
- Measure human error rates
- Introduce AI as advisory first
- Validate with historical data
- Add human oversight
- Monitor drift and bias
- Iterate continuously
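The checklist steps on measuring human error rates and validating with historical data can start as a simple backtest. Score past human decisions and the model's advisory output against the outcomes that actually happened. This is a minimal sketch: the record layout (`human`, `model`, `outcome` keys) and the function names are hypothetical. A fair comparison also requires that the model was not trained on the cases it is being tested against.

```python
def error_rate(decisions, outcomes) -> float:
    """Share of decisions that disagreed with the actual outcome."""
    if len(decisions) != len(outcomes) or not outcomes:
        raise ValueError("need equal-length, non-empty sequences")
    wrong = sum(d != o for d, o in zip(decisions, outcomes))
    return wrong / len(outcomes)


def backtest(history: list[dict]) -> dict[str, float]:
    """Compare human and model error rates on the same historical cases.

    Each record is a dict with hypothetical keys 'human', 'model', 'outcome'.
    """
    outcomes = [record["outcome"] for record in history]
    return {
        "human_error": error_rate([record["human"] for record in history], outcomes),
        "model_error": error_rate([record["model"] for record in history], outcomes),
    }
```

Running the model in advisory mode first lets this comparison accumulate on live cases before anyone hands it decision authority.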

Common Mistakes (and How to Avoid Them)

Mistake: Expecting AI to replace judgment

Fix: Use it to augment, not replace

Mistake: Feeding poor data

Fix: Data quality audits first

Mistake: Blind trust in outputs

Fix: Explainability and monitoring

Mistake: Ignoring edge cases

Fix: Human-in-the-loop design

FAQ

Q1: Does AI always outperform humans?

No. It tends to outperform humans mainly in high-complexity, data-rich scenarios.

Q2: Is AI less biased than humans?

No. It has different biases, not an absence of bias.

Q3: Can small companies benefit?

Yes, especially where teams are overloaded.

Q4: Does complexity mean automation is risky?

It means manual decision-making is riskier.

Q5: What skill matters most?

Understanding system behavior, not algorithms.

Author’s Insight

In real projects, the biggest mistake is assuming complexity can be “managed” with experience alone. I have seen AI outperform expert teams not because it was smarter, but because it never stopped paying attention. The strongest results come when humans focus on meaning and accountability, while AI handles the overwhelming math underneath.

Conclusion

AI handles complexity better than humans because it is built for scale, uncertainty, and continuous learning. The future of decision-making belongs not to AI alone, but to systems where machines manage complexity and humans provide judgment.